by Matt Zimmerman

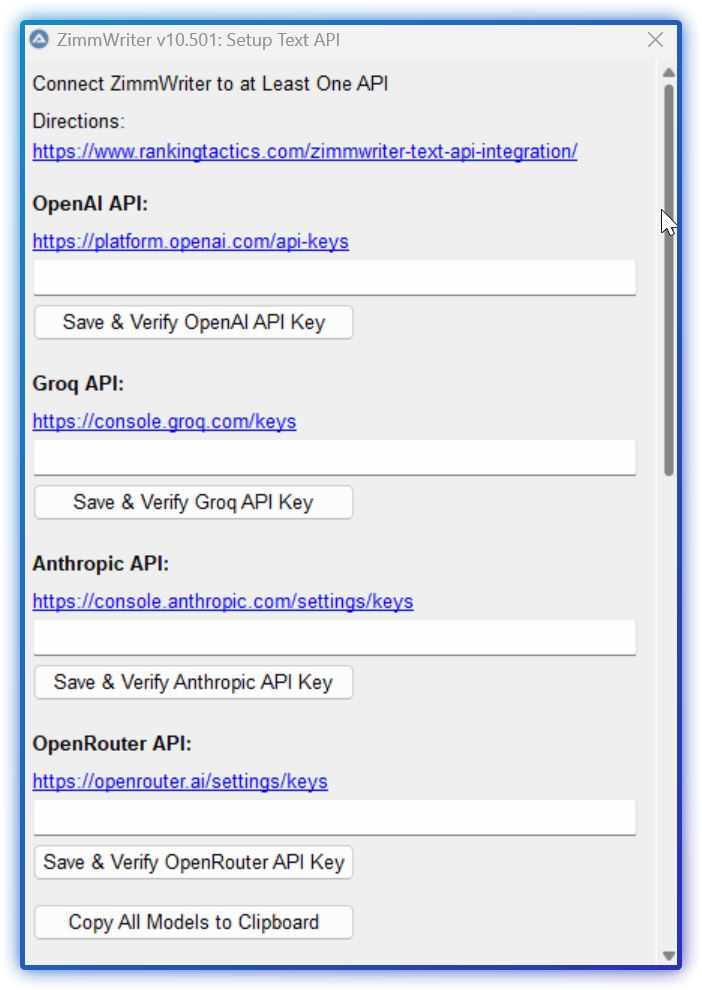

ZimmWriter is incredibly flexible when it comes to choosing your AI platform for article writing. You can pick AI models from these providers: OpenAI (OAI), Anthropic (ANT), Groq (GROQ), or OpenRouter (OR).

Each has its pros and cons, but ZimmWriter lets you unlock all of them inside the software! Once you’ve saved and verified one of their API keys, you’ll see their models in the AI model selection dropdown within ZimmWriter.

One thing to note: ZimmWriter was originally built on OpenAI’s models, and every other model has its own quirks and issues. Although I’ve tried to patch around the things that produce unexpected outputs, you might still run into glitches in blog posts written with non-OpenAI models. So make sure you review your articles carefully.

Here is the location in the ZimmWriter options menu to add these additional API keys:

OpenAI’s models are the tried-and-true champions. They work wonders inside ZimmWriter because it was built around them.

Head on over to OpenAI’s website and sign yourself up.

After you sign up, you’ll need to add a credit card as a billing method. Next, you’ll need to add some credits to your account. I recommend $50 since that will unlock higher speeds in your account.

Finally, once you’ve done this, you can generate an API key with OpenAI.

Anthropic, like OpenAI, is a company that provides AI models which ZimmWriter can use to write blog posts.

First, head over to Anthropic’s website and sign up to receive an API key.

Upon creating an account, you’ll automatically enter the free tier. Like OpenAI, Anthropic enforces usage and rate limits. To quickly advance to tier 2, which is ideal for running a single ZimmWriter license, simply add $50 to your account. Although the usage and rate limit page mentions a 7-day waiting period, I was immediately upgraded, so you might have similar luck.

If you need to run multiple licenses of ZimmWriter on different computers, consider moving up to higher tiers to accommodate extensive use of Anthropic’s Claude models.

On the Anthropic website, you’ll also find an option to generate an API key. Once you have it, go to the ZimmWriter options menu and click ‘Setup Text API (Non-OpenAI)’. Enter your key there, and you’re all set.

At the time of writing this article, Anthropic offers three models that ZimmWriter can currently utilize.

You can find specific pricing for these models on Anthropic’s pricing page. Each model’s pricing is listed for both ‘input’ tokens and ‘output’ tokens.

What are input and output tokens?

When you interact with the AI, ZimmWriter sends your request, which consumes input tokens, and then the AI responds, which consumes output tokens.

However, the prices on the page are listed per million tokens. For example, Claude 3 Sonnet costs $15 per million output tokens. But how does this translate to the cost of writing articles?

It’s challenging to determine precisely because the cost varies significantly depending on the settings you choose for writing your articles. Generally, a rough estimate is that one million tokens for input and output combined could produce about 50-75 bulk writer articles, each with 10 H2 headers, FAQs, no H3 headers, no SERP scraping, and no custom outlines.
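To make that estimate concrete, here is a minimal Python sketch that turns it into a per-article cost range. The $15 output price comes from the example above. The $3 input price is an assumption added for illustration, so check Anthropic’s pricing page for current numbers. Because the split between input and output tokens isn’t known, the sketch only brackets the cost: the low end bills every token at the input price, and the high end bills every token at the output price.

```python
# Rough per-article cost bounds from the "50-75 articles per million tokens" estimate.
# OUTPUT_PRICE comes from the article's example; INPUT_PRICE is an assumption.

OUTPUT_PRICE_PER_MILLION = 15.0  # USD, Claude 3 Sonnet output tokens (from the article)
INPUT_PRICE_PER_MILLION = 3.0    # USD, assumed input price for illustration only


def tokens_per_article(articles_per_million: float) -> float:
    """Average combined (input + output) tokens used by one article."""
    return 1_000_000 / articles_per_million


def cost_usd(tokens: float, price_per_million: float) -> float:
    """Cost of a number of tokens at a given price per million tokens."""
    return tokens / 1_000_000 * price_per_million


if __name__ == "__main__":
    for articles in (50, 75):
        tokens = tokens_per_article(articles)
        low = cost_usd(tokens, INPUT_PRICE_PER_MILLION)    # if every token were input
        high = cost_usd(tokens, OUTPUT_PRICE_PER_MILLION)  # if every token were output
        print(f"{articles} articles per million tokens: "
              f"about {tokens:,.0f} tokens each, ${low:.2f} to ${high:.2f} per article")
```

Under these assumptions, the worst case works out to $15 divided by 50 articles, or about $0.30 per article. The real figure falls somewhere inside the range, depending on your settings.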

Groq is another company whose AI models you can use. The main difference is that Groq serves open-source models from Meta (Facebook) rather than its own. Currently, the only model powerful enough to run ZimmWriter’s prompts is the LLaMA3 70b model.

You can register for an account and get your API key on Groq’s API page.

Now the really amazing thing (at least at the time of writing this) is that you can use Groq’s API for free! Soon it’ll switch to a paid mode, but for now, it’s free.

But… (there’s always a but).

The free plan is rate limited, so it’s going to run slower than OpenAI’s or Anthropic’s models. But when it goes paid, it should be the fastest generator on the market.

The only model capable of running ZimmWriter prompts is LLaMA3 70b.

People rank the quality of LLaMA3 70b higher than GPT4 Turbo but slightly below GPT4.

Now, you might be wondering why you can’t simply run this open-source model developed by Facebook on your personal computer for free.

That’s an excellent question.

I’ve tested the LLaMA3 8b model, which has 8 billion parameters and should technically run on most computers, but it fails to handle the prompts, producing unusable results. Therefore, we must rely on the 70 billion parameter model.

However, the LLaMA3 70b model requires 34GB of GPU RAM, exceeding the 24GB maximum found in consumer-grade graphics cards. I’m uncertain about using SLI configurations, but that’s likely beyond what most users possess.

Running it on PC RAM isn’t viable either due to memory bandwidth limitations.
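The memory and bandwidth points above can be checked with some back-of-the-envelope arithmetic. The sketch below is a rough rule of thumb, not a benchmark. It assumes the weights dominate memory use, and that generating each token means reading all the weights from memory once, so memory bandwidth caps the speed. The 4-bit quantization and both bandwidth figures are assumptions picked for illustration. At about 4 bits per weight, a 70 billion parameter model needs roughly 35GB, which is in the same ballpark as the 34GB figure.

```python
# Back-of-the-envelope sizing for running a large language model locally.
# All bandwidth figures below are illustrative assumptions, not measurements.


def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes).

    params_billion * 1e9 weights * bits_per_weight / 8 bytes, divided by 1e9.
    """
    return params_billion * bits_per_weight / 8


def max_tokens_per_second(model_gb: float, bandwidth_gb_per_s: float) -> float:
    """Upper bound on speed if every token requires reading all weights once."""
    return bandwidth_gb_per_s / model_gb


if __name__ == "__main__":
    for name, params in (("LLaMA3 8b", 8), ("LLaMA3 70b", 70)):
        for bits in (16, 4):  # full half-precision vs. an assumed 4-bit quantization
            print(f"{name} at {bits}-bit: ~{weight_memory_gb(params, bits):.0f} GB")

    model_gb = weight_memory_gb(70, 4)
    # Hypothetical bandwidths: ordinary desktop RAM vs. a high-end GPU.
    for label, bandwidth in (("desktop RAM (assumed 50 GB/s)", 50.0),
                             ("high-end GPU (assumed 1000 GB/s)", 1000.0)):
        print(f"{label}: at most ~{max_tokens_per_second(model_gb, bandwidth):.1f} tokens/s")
```

Real-world speeds usually land below these ceilings, since the calculation ignores compute time and the extra memory needed for context.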

You might think that M-series Mac computers, with their superior memory bandwidth, could be a solution. Why not run it there using ZimmWriter on Parallels or Wine?

Another great question.

Unfortunately, that approach also falls short. Even my M2 Max with 96GB RAM struggles with the LLaMA3 70b model, making it impractically slow. Plus, how many of you have M-series Macs with over 64GB of RAM?

For now, Groq remains an excellent alternative.

Even when Groq starts charging, they’ve indicated their pricing will be competitive with or less than OpenAI’s GPT 3.5.

OpenRouter is the latest addition to ZimmWriter, and boy oh boy, is it powerful!

You can use OpenRouter’s API key to connect to multiple models. It saves you the hassle of getting different API keys and helps if you’re in a country where OpenAI (or one of the other AI model providers) is banned.

Just head on over to the OpenRouter website, create an account, seed it with some credits, and make an API key.
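For the curious, here is a minimal Python sketch of what "one key, many models" looks like under the hood. It is not how ZimmWriter itself talks to OpenRouter. It assumes OpenRouter’s OpenAI-style chat completions endpoint, and the model IDs and the placeholder key are examples only. The sketch builds the requests without sending them, so you can run it without spending credits.

```python
# Sketch: the same OpenRouter API key can address different models.
# The endpoint URL and model IDs are assumptions for illustration; check
# OpenRouter's documentation for the current values.
import json
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for the given model."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    api_key = "YOUR-OPENROUTER-KEY"  # placeholder, not a real key
    # Illustrative model IDs; only the "model" field changes between requests.
    for model in ("openai/gpt-3.5-turbo", "meta-llama/llama-3-70b-instruct"):
        req = build_request(api_key, model, "Write a one-sentence intro about coffee.")
        print(req.get_method(), req.full_url, json.loads(req.data)["model"])
        # To actually send it (needs a real key and credits):
        # with urllib.request.urlopen(req) as resp:
        #     print(json.load(resp))
```

The only thing that changes between providers is the `model` string, which is why a single key is enough.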

OpenRouter has a bunch of models, but I’ve only selected certain ones for ZimmWriter that can handle its prompts.

These models can change anytime, as OpenRouter might remove them from their platform.

Prices are also variable, but they usually don’t go up. The price shown in the text files generated by ZimmWriter is only as accurate as the last check on the OpenRouter servers. ZimmWriter checks the price when you first start it or change your API key, so keep that in mind.

Note: Some models, such as Perplexity Online, carry an additional per-request charge (e.g., $5 per 1,000 requests). ZimmWriter does not reflect this charge in the text file, so keep it in mind and monitor your OpenRouter account.
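If you want to estimate the real cost of an article written with one of these models, you can add the per-request fee yourself. The sketch below uses the $5 per 1,000 requests example from the note. The reported cost and the request count are made-up numbers for illustration, since the number of requests per article depends on your settings.

```python
# Sketch: add a per-request surcharge on top of the token cost ZimmWriter reports.
# The fee default matches the example in the note; other inputs are hypothetical.


def per_request_surcharge(num_requests: int, fee_per_thousand: float = 5.0) -> float:
    """Extra charge in USD for a number of API requests."""
    return num_requests / 1000 * fee_per_thousand


def true_cost(reported_cost: float, num_requests: int,
              fee_per_thousand: float = 5.0) -> float:
    """Token cost from the text file plus the per-request surcharge."""
    return reported_cost + per_request_surcharge(num_requests, fee_per_thousand)


if __name__ == "__main__":
    reported = 0.10  # hypothetical token cost shown in the text file, in USD
    requests = 40    # hypothetical number of API requests for one article
    extra = per_request_surcharge(requests)
    print(f"Surcharge: ${extra:.2f}, estimated total: ${true_cost(reported, requests):.2f}")
```

With these example numbers, the surcharge is larger than the token cost itself, which is why it is worth watching your OpenRouter balance.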
